Two minute warning (16 Mar 2006)

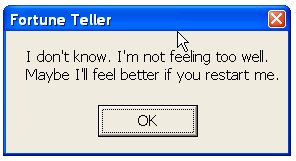

Software is a freaky thing. I still don't know how to explain what it is to my mum and dad. I could tell them about ones and zeroes, and about how layer upon layer of hardware and software build on one another until they finally form what they see on a computer screen or in a digital camera. But I'm not overly confident I could get across how this stuff really works, partly because I hardly think about the inner workings of computer systems anymore. The von Neumann architecture is so deeply engraved in Joe Developer's reasoning and mindset that it has become an almost subconscious foundation of our daily work.

Many of those who use computers every day, however, never really understand how these gadgets work. They usually get by because, over time, the software industry has developed UI metaphors which shield users from the internal complexity. Until something unexpected happens, such as an application crash.

Once upon a time, in a reality not too far away, a Japanese user started seeing application crashes. The bug report through which we learned about his problems did not really complain about individual bugs or about the situations in which the crashes occurred. Instead, he asked us to add a feature that would alert the user before a crash occurred, so that he would have the chance to save his data and exit before the crash actually happened.

Now, this was not a request for a top-level exception handler which kicks in when a crash occurs, reports the issue to the user and makes a last-ditch effort to save the data in memory. We already had that in the application. No, what the customer really wanted was for our application to predict that a crash was looming in the near future.

My brain starts to hurt whenever I think about this request. After all, a crash is usually caused by a hitherto undetected bug in the code, i.e. by an issue which neither we as the programmers nor the software itself know about. Being able to predict a crash caused by a bug in our code is more or less equivalent to knowing that the bug exists, where it is located, what the user's next action will be, and whether that course of action would lead him into the "danger zone". I'll ignore the bit about predicting the user's action for a moment; but if either we or the software already knew about the bug, why not simply fix it in the first place rather than ceremoniously announcing it to the user? (Did I miss something? Do any of the more recent CPUs have a clairvoyance opcode that we could use?)
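The difference between what we had and what the customer wanted is easiest to see in code. Below is a minimal Python sketch of the kind of top-level exception handler mentioned above. It is not our application's code. `unsaved_document`, `emergency_save` and `top_level_handler` are hypothetical names, and the "save" simply dumps the in-memory data to a JSON file in the temp directory. Note where the handler sits in time: `sys.excepthook` is only called after an exception has gone unhandled, so it reacts to a crash and cannot see one coming.

```python
import json
import sys
import tempfile
from pathlib import Path

# Hypothetical stand-in for the user's unsaved, in-memory data.
unsaved_document = {"title": "draft", "paragraphs": ["Hello", "World"]}


def emergency_save(data: dict) -> Path:
    """Last-ditch effort: dump in-memory data to a recovery file."""
    fd, name = tempfile.mkstemp(prefix="recovery-", suffix=".json")
    with open(fd, "w", encoding="utf-8") as f:  # open() accepts the raw descriptor
        json.dump(data, f)
    return Path(name)


def top_level_handler(exc_type, exc_value, exc_tb):
    """Runs only once an exception has already escaped all other handlers."""
    try:
        path = emergency_save(unsaved_document)
        print(f"Sorry, the application crashed ({exc_type.__name__}: {exc_value}).",
              file=sys.stderr)
        print(f"Your data was saved to {path}.", file=sys.stderr)
    except Exception:
        # Saving can fail too; skip our message, the default report below still runs.
        pass
    sys.__excepthook__(exc_type, exc_value, exc_tb)


# Install the handler for uncaught exceptions in the main thread.
sys.excepthook = top_level_handler
```

This only covers crashes that surface as exceptions. A hard crash, such as a segmentation fault in native code, never reaches a Python-level hook at all.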

It took me only a short while to explain this to our support folks, but then, they are sufficiently versed in software that they kind of "got it" naturally, even though most of them do not develop any software. I don't think, however, that we ever succeeded in communicating this properly to the customer.


Maybe I even understand the customer. He was probably thinking he was kind of generous to us; after all, he was willing to accept that any kind of software inevitably has some bugs, some of which even cause crashes, and that there is no practical way of dealing with this other than using the software and fixing the issues one by one.

But at the very minimum, he wanted to be warned. I mean, how hard can this be, after all! Even cars alert their drivers if there is a problem with the car which should be taken care of in a garage as soon as possible. Most of these problems, however, are not immediately fatal. The car continues to work for some time; you don't have to stop it right away and have it towed to the garage, but can drive it there yourself, which is certainly more convenient.

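What seems to be a fairly simple idea to a customer is a nerve-wracking prospect for a developer. There is really no way to predict the future, not even in a computer program. This is what the halting problem teaches us: no algorithm can decide, for every program and every input, whether that program will eventually stop, and the same kind of argument rules out a general-purpose crash predictor. However, what seems obvious to a developer sounds like a lame excuse to someone who is not that computer-savvy.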
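The halting-problem argument can be played out in a few lines. Suppose someone handed us a function that claims to tell, without running it, whether a program will crash. The Python sketch below builds a program that does the opposite of whatever the predictor says about it, so every candidate predictor is wrong on at least one program. `make_contrarian`, `crashes` and the sample predictors are hypothetical names for illustration. This is the classic diagonal argument applied to crashes instead of halting, not a formal proof. It assumes the predictor always returns an answer.

```python
from typing import Callable

Program = Callable[[], object]
Predictor = Callable[[Program], bool]  # True means "this program will crash"


def make_contrarian(predicts_crash: Predictor) -> Program:
    """Build a program that does the opposite of what the predictor says about it."""
    def contrarian() -> object:
        if predicts_crash(contrarian):
            return "ran fine"          # predicted to crash, so don't
        raise RuntimeError("crash")    # predicted to be safe, so crash
    return contrarian


def crashes(program: Program) -> bool:
    """Ground truth, obtained the only reliable way: by actually running it."""
    try:
        program()
    except RuntimeError:
        return True
    return False


candidates = {
    "pessimist": lambda program: True,
    "optimist": lambda program: False,
    "by name length": lambda program: len(program.__name__) % 2 == 0,
}

for name, predictor in candidates.items():
    victim = make_contrarian(predictor)
    print(f"{name}: predicted {predictor(victim)}, actually crashed {crashes(victim)}")
```

Every line of output reports a mismatch. However clever a predictor is, `make_contrarian` can always construct a program it gets wrong.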

But then, maybe there are ways to monitor the health of software and the data which it processes, and maybe, based on a lot of heuristics, we could even translate those observations into warnings for users without causing too many false alarms...
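To make that speculation a little more concrete, here is one way such a heuristic might look. It watches a few health signals and warns only when a signal stays past its threshold for several consecutive samples, which trades some reaction time for fewer false alarms. The signal names, thresholds, `Rule` and `HealthMonitor` are all hypothetical. Nothing like this was in our product, and there is no guarantee it would flag the bug that actually brings the program down.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Rule:
    """Hypothetical heuristic: a signal is suspicious above `limit`."""
    limit: float
    patience: int  # consecutive suspicious samples required before warning


@dataclass
class HealthMonitor:
    rules: Dict[str, Rule]
    _streaks: Dict[str, int] = field(default_factory=dict, init=False)

    def sample(self, readings: Dict[str, float]) -> List[str]:
        """Feed one set of readings; return the warnings to show right now."""
        warnings = []
        for name, rule in self.rules.items():
            value = readings.get(name)
            if value is None or value <= rule.limit:
                self._streaks[name] = 0  # healthy (or unknown): reset the streak
                continue
            self._streaks[name] = self._streaks.get(name, 0) + 1
            # Warn once, exactly when the streak reaches the required length.
            if self._streaks[name] == rule.patience:
                warnings.append(
                    f"{name} has exceeded {rule.limit} for {rule.patience} "
                    "samples in a row; consider saving your work."
                )
        return warnings


monitor = HealthMonitor(rules={
    "memory_mb": Rule(limit=1500, patience=3),
    "handle_count": Rule(limit=9000, patience=2),
})
readings = [
    {"memory_mb": 1600, "handle_count": 100},   # one spike: no warning yet
    {"memory_mb": 1200, "handle_count": 100},   # back to normal: streak resets
    {"memory_mb": 1700, "handle_count": 9500},
    {"memory_mb": 1800, "handle_count": 9600},  # handle_count warns here
    {"memory_mb": 1900, "handle_count": 9700},  # memory_mb warns here
]
for step, r in enumerate(readings):
    for w in monitor.sample(r):
        print(f"step {step}: {w}")
```

The `patience` parameter is the knob between the two failure modes. Set it to 1 and every transient spike alarms the user. Set it too high and the warning may arrive after the crash it was meant to announce.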

